Stable Video Diffusion represents a significant advancement in generative AI, enabling the creation of coherent video sequences from static images or text prompts. However, maintaining temporal consistency across frames remains one of the most challenging aspects of video generation. This article examines the mathematical foundations underlying temporal coherence mechanisms, analyzes common artifacts that emerge during generation, and presents cutting-edge research findings on improving frame-to-frame consistency through advanced latent space conditioning techniques.

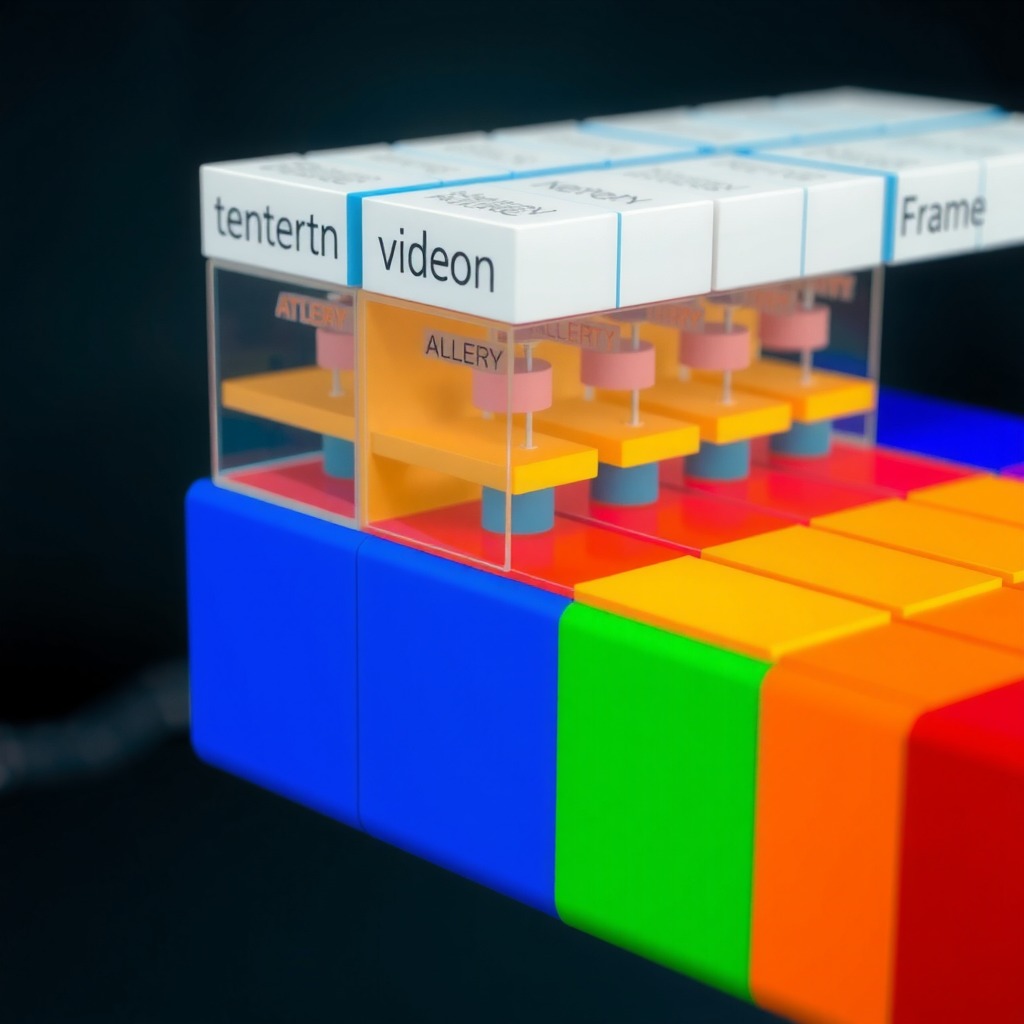

Understanding temporal coherence requires a deep dive into the architecture of diffusion models, particularly how they process sequential information and maintain consistency across the temporal dimension. Unlike image generation, where each output is independent, video generation must account for motion continuity, object persistence, and smooth transitions between frames.

Mathematical Foundations of Temporal Attention

At the core of temporal coherence in Stable Video Diffusion lies the temporal attention mechanism, which extends traditional self-attention to operate across the time dimension. The mathematical formulation begins with the standard attention equation, modified to incorporate temporal dependencies. For a sequence of frames F = {f₁, f₂, ..., fₙ}, the temporal attention mechanism computes attention weights that consider both spatial and temporal relationships.

Attention(Q, K, V) = softmax(QKᵀ / √dₖ)V

Temporal_Attention(Qₜ, Kₜ, Vₜ) = softmax((QₜKₜᵀ + Pₜ) / √dₖ)Vₜ

The temporal attention mechanism introduces a positional term Pₜ, added to the attention logits, that explicitly models the temporal distance between frames. This allows the model to learn that frames closer in time should have stronger correlations than distant frames. The positional encoding typically follows a sinusoidal pattern, which in principle lets the model handle sequence lengths not seen during training, although quality at those lengths is not guaranteed.
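As a rough illustration of the temporal attention formula above, the following NumPy sketch applies attention along the frame axis with an additive distance term. The function names and the linear bias `-slope * |i - j|` are assumptions chosen for readability; they stand in for Pₜ and are not the encoding used by any particular Stable Video Diffusion release.

```python
import numpy as np

def temporal_distance_bias(num_frames, slope=1.0):
    """Hypothetical stand-in for P_t: bias[i, j] = -slope * |i - j|."""
    idx = np.arange(num_frames)
    return -slope * np.abs(idx[:, None] - idx[None, :])

def temporal_attention(q, k, v, bias):
    """Compute softmax((Q K^T + P) / sqrt(d_k)) V along the frame axis.

    q, k, v: arrays of shape (num_frames, d_k)
    bias:    array of shape (num_frames, num_frames)
    Returns the attended values and the attention weights.
    """
    d_k = q.shape[-1]
    logits = (q @ k.T + bias) / np.sqrt(d_k)
    logits = logits - logits.max(axis=-1, keepdims=True)  # numerical stability
    weights = np.exp(logits)
    weights = weights / weights.sum(axis=-1, keepdims=True)
    return weights @ v, weights

rng = np.random.default_rng(0)
n, d = 6, 8
# Zero queries and keys isolate the effect of the bias on the weights.
q = np.zeros((n, d))
k = np.zeros((n, d))
v = rng.normal(size=(n, d))
out, w = temporal_attention(q, k, v, temporal_distance_bias(n, slope=2.0))
```

With content similarity removed, each row of `w` peaks at the frame itself and decays with temporal distance, which is the behavior the positional term is meant to encourage.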

Recent research has demonstrated that incorporating learnable temporal embeddings alongside fixed positional encodings significantly improves coherence. These embeddings adapt during training to capture motion-specific patterns, such as the characteristic movement of water, cloth, or human gestures. The combination of fixed and learnable components provides both stability and flexibility in modeling temporal dynamics.

The attention weights computed through this mechanism create a temporal dependency graph, where each frame attends to relevant features in neighboring frames. This graph structure enables the propagation of consistent features across the sequence, ensuring that objects maintain their identity and appearance throughout the video. The strength of these connections is modulated by learned parameters that balance temporal consistency with the need for natural motion variation.

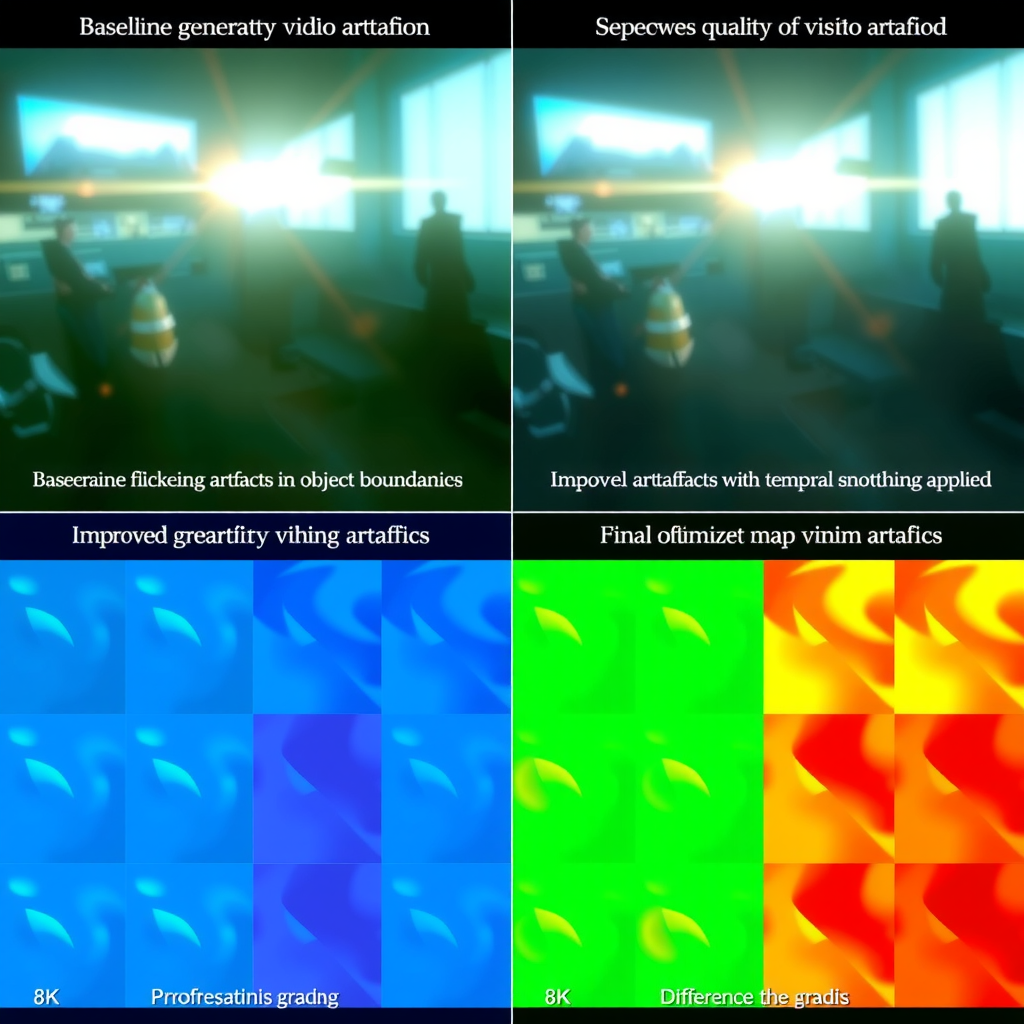

Analyzing Temporal Artifacts: Flickering and Morphing

Despite sophisticated attention mechanisms, Stable Video Diffusion systems frequently exhibit temporal artifacts that degrade visual quality and break immersion. The two most prevalent categories are flickering artifacts and morphing artifacts, each arising from distinct failure modes in the generation process. Understanding these artifacts requires examining both the mathematical properties of the diffusion process and the practical limitations of current architectures.

Flickering artifacts manifest as rapid, inconsistent changes in pixel values across consecutive frames, particularly in regions with fine details or complex textures. These artifacts typically occur when the denoising process resolves ambiguous features slightly differently from one frame to the next. The mathematical root cause lies in the stochastic nature of the sampling process, where small variations in noise can lead to divergent predictions in adjacent frames.

Quantifying Flickering Intensity

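Flickering can be quantified using temporal variance metrics. For a pixel location (x, y), the flickering intensity F(x,y) is the variance of the pixel value over time normalized by its temporal mean: F(x,y) = Varₜ(I(x,y,t)) / Meanₜ(I(x,y,t)). The ratio is undefined where the mean is zero, so very dark pixels need a small stabilizing constant or a mask. Because genuine motion also raises temporal variance, the metric is most informative in regions that should be static. The temporal Fourier transform gives a complementary view: flickering concentrates energy at high temporal frequencies, approaching the Nyquist limit of half the frame rate, above the band that natural motion typically occupies.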
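Both measurements can be sketched in a few lines of NumPy. The function names, the `eps` stabilizer, and the `cutoff_fraction` threshold are illustrative assumptions, not part of any standard metric definition.

```python
import numpy as np

def flicker_intensity(video, eps=1e-8):
    """Per-pixel temporal variance divided by temporal mean.

    video: array of shape (T, H, W) with non-negative intensities.
    eps guards against division by zero in dark regions (an assumption).
    """
    return video.var(axis=0) / (video.mean(axis=0) + eps)

def high_frequency_ratio(video, cutoff_fraction=0.5):
    """Share of non-DC temporal energy above cutoff_fraction * Nyquist."""
    spectrum = np.abs(np.fft.rfft(video, axis=0)) ** 2
    freqs = np.fft.rfftfreq(video.shape[0])  # cycles per frame, 0 to 0.5
    ac_energy = spectrum[1:].sum()           # everything except the DC bin
    high_energy = spectrum[freqs > cutoff_fraction * 0.5].sum()
    return high_energy / (ac_energy + 1e-12)

t = np.arange(16)
shape = (16, 4, 4)
# Slow brightness change: one cycle over the whole clip.
steady = np.broadcast_to((0.5 + 0.1 * np.sin(2 * np.pi * t / 16))[:, None, None], shape)
# Frame-to-frame alternation: the fastest change the frame rate can represent.
flicker = np.broadcast_to((0.5 + 0.1 * (-1.0) ** t)[:, None, None], shape)

steady_ratio = high_frequency_ratio(steady)
flicker_ratio = high_frequency_ratio(flicker)
flicker_map = flicker_intensity(flicker)
```

The slow sequence places essentially all of its energy in the lowest non-DC bin, while the alternating sequence places it at the Nyquist bin, so the ratio separates the two cases even though both have non-zero temporal variance.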

Morphing artifacts represent a more severe form of temporal inconsistency, where objects gradually change shape, appearance, or identity across frames. Unlike flickering, which involves high-frequency noise, morphing occurs at lower temporal frequencies and often affects entire objects or regions. This phenomenon emerges when the latent space trajectory during generation drifts away from the manifold of consistent object representations.

Research has identified several contributing factors to morphing artifacts. First, insufficient temporal context in the attention window can cause the model to lose track of object identity over longer sequences. Second, when the latent distribution admits several competing interpretations of the same scene element, the generation process can oscillate between them rather than committing to one. Third, conflicting guidance signals from text prompts and visual conditioning can create ambiguity that manifests as gradual morphing over time.

Advanced Latent Space Conditioning Techniques

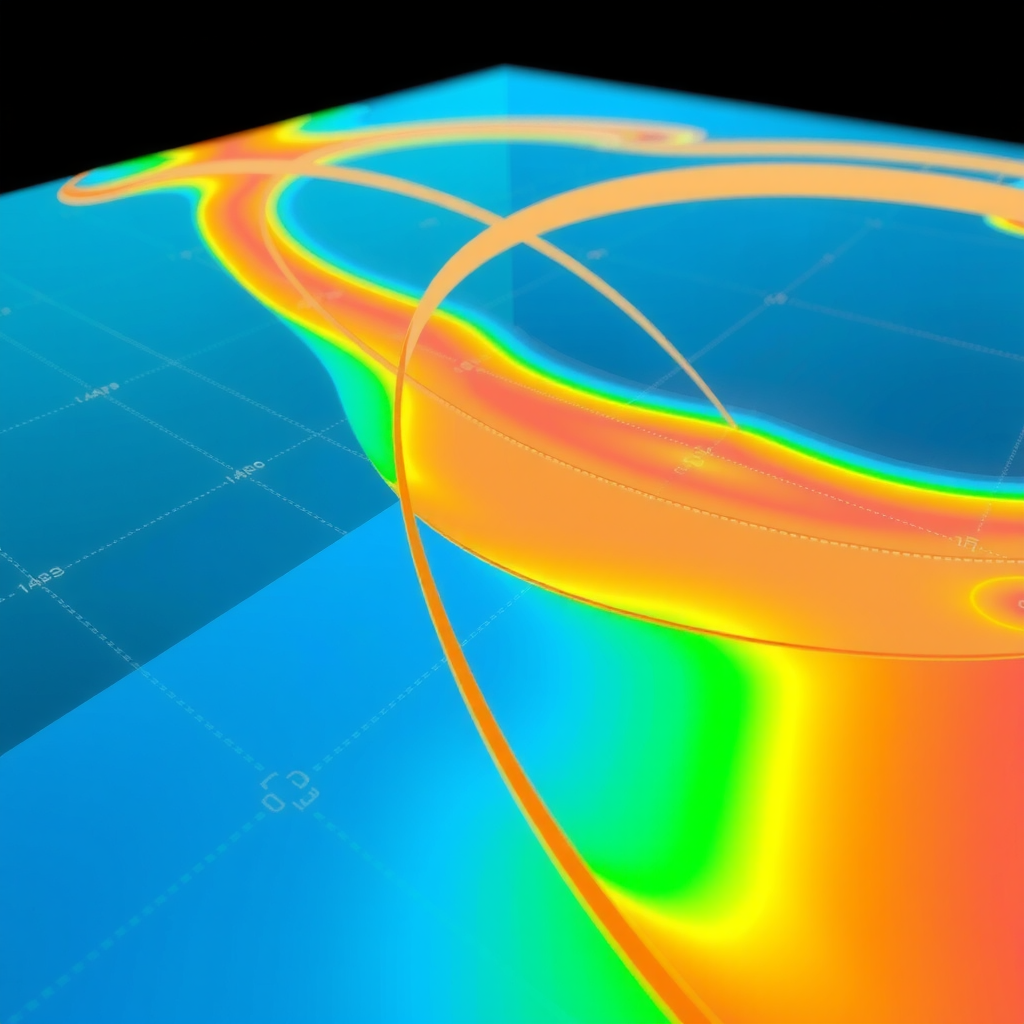

Recent advances in temporal coherence have focused on sophisticated latent space conditioning strategies that guide the generation process along smooth, consistent trajectories. These techniques operate on the principle that maintaining coherence in latent space naturally translates to coherence in pixel space, provided the latent representation is sufficiently expressive and well-structured.

One prominent approach involves trajectory regularization, where the latent representations of consecutive frames are constrained to lie on a smooth manifold. This is achieved by adding a regularization term to the training objective that penalizes large deviations in latent space between adjacent frames. The regularization strength is typically modulated by the expected motion magnitude, allowing for natural variation while preventing abrupt discontinuities.
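A minimal sketch of such a penalty is shown below. The weighting `base_weight / (1 + motion_magnitude)` is one plausible way to relax the constraint where more motion is expected; it is an assumption for illustration, and the names are hypothetical.

```python
import numpy as np

def trajectory_regularizer(latents, motion_magnitude, base_weight=1.0):
    """Motion-weighted smoothness penalty on a latent trajectory.

    latents:          (T, D) array, one latent vector per frame
    motion_magnitude: (T-1,) expected motion between frames t and t+1
    Larger expected motion gives a smaller penalty weight.
    """
    steps = np.diff(latents, axis=0)           # (T-1, D) frame-to-frame change
    sq_norms = (steps ** 2).sum(axis=1)        # squared step length
    weights = base_weight / (1.0 + motion_magnitude)
    return float((weights * sq_norms).mean())

# A trajectory that moves evenly from 0 to 1 in every latent dimension.
smooth = np.linspace(0.0, 1.0, 8)[:, None] * np.ones((1, 4))
# The same trajectory with one frame pushed off the path.
jumpy = smooth.copy()
jumpy[4] += 1.0

no_motion = np.zeros(7)
smooth_penalty = trajectory_regularizer(smooth, no_motion)
jumpy_penalty = trajectory_regularizer(jumpy, no_motion)
relaxed_penalty = trajectory_regularizer(smooth, np.ones(7))
```

In training, a term like this would be added to the denoising objective with a tunable coefficient; the sketch only evaluates the penalty on fixed arrays.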

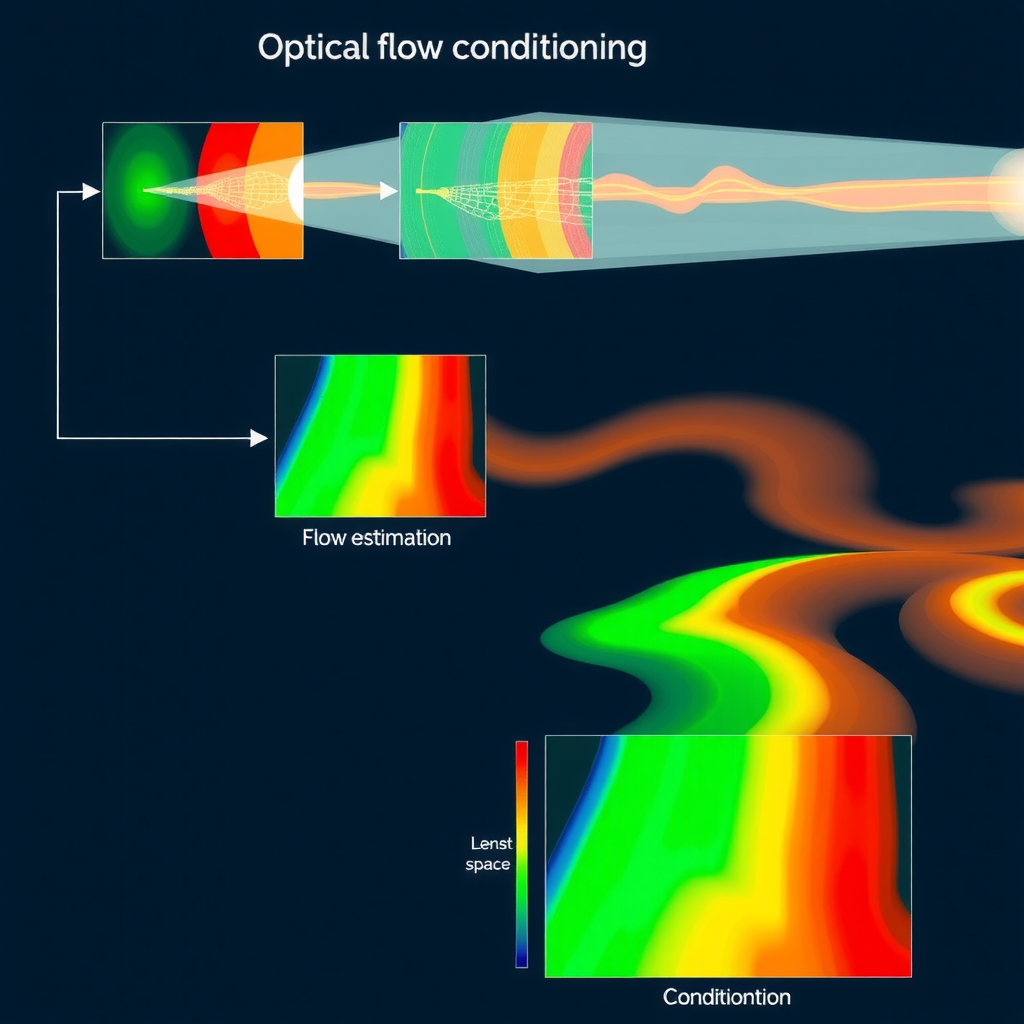

Optical flow conditioning represents another powerful technique for improving temporal consistency. By explicitly modeling motion between frames using optical flow fields, the generation process can be guided to produce pixel displacements that align with physically plausible motion patterns. This approach has shown particular effectiveness in scenarios involving camera motion or object translation, where the motion can be decomposed into coherent flow fields.
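One common way to check whether two frames agree with a flow field is to warp the earlier frame and measure the residual. The sketch below uses nearest-neighbor backward warping for simplicity; the flow convention (each target pixel stores the `(dy, dx)` offset at which to sample the previous frame) and the function names are assumptions, and practical systems typically use bilinear sampling and an occlusion mask.

```python
import numpy as np

def warp_backward(prev_frame, flow):
    """Nearest-neighbor backward warp of a single-channel frame.

    prev_frame: (H, W) array
    flow:       (H, W, 2) array of (dy, dx) sampling offsets into prev_frame
    Out-of-range samples are clamped to the border.
    """
    h, w = prev_frame.shape
    ys, xs = np.meshgrid(np.arange(h), np.arange(w), indexing="ij")
    src_y = np.clip(np.rint(ys + flow[..., 0]).astype(int), 0, h - 1)
    src_x = np.clip(np.rint(xs + flow[..., 1]).astype(int), 0, w - 1)
    return prev_frame[src_y, src_x]

def warping_error(prev_frame, next_frame, flow):
    """Mean squared difference between next_frame and the warped prev_frame."""
    return float(np.mean((next_frame - warp_backward(prev_frame, flow)) ** 2))

rng = np.random.default_rng(0)
prev = rng.random((8, 8))
# Content shifts one pixel to the right; the left column is replicated.
nxt = prev.copy()
nxt[:, 1:] = prev[:, :-1]

true_flow = np.zeros((8, 8, 2))
true_flow[..., 1] = -1.0          # sample one pixel to the left
zero_flow = np.zeros((8, 8, 2))

err_true = warping_error(prev, nxt, true_flow)
err_zero = warping_error(prev, nxt, zero_flow)
```

A low warping error under the intended flow indicates that the generated displacement matches the conditioning signal; a high error flags frames where the content moved inconsistently.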

Key Insight: Combining trajectory regularization with optical flow conditioning creates a multi-scale consistency framework. Trajectory regularization ensures global coherence across the entire sequence, while optical flow conditioning maintains local consistency between adjacent frames. This hierarchical approach addresses both long-range and short-range temporal dependencies.

Cross-frame attention mechanisms have also evolved to incorporate learned temporal priors. Rather than treating all frames equally, these mechanisms learn to identify keyframes that serve as temporal anchors, with intermediate frames generated through interpolation in latent space. This keyframe-based approach reduces computational cost while maintaining high-quality temporal consistency, as the model can focus its capacity on generating coherent anchor points and smoothly interpolating between them.
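The interpolation step of a keyframe-based scheme can be sketched as follows. Linear interpolation is used here only because it is the simplest choice; spherical interpolation is a common alternative for Gaussian-like latents, and a real pipeline would usually refine the interpolated latents with further denoising. The function name and array layout are assumptions.

```python
import numpy as np

def interpolate_keyframes(key_latents, key_indices, num_frames):
    """Linearly interpolate latents between keyframes.

    key_latents: (K, D) array of keyframe latents
    key_indices: increasing frame positions of the K keyframes
    num_frames:  total number of frames to produce
    Frames outside the keyframe range repeat the nearest keyframe.
    """
    frames = np.arange(num_frames)
    # np.interp handles 1-D data, so each latent dimension is interpolated separately.
    columns = [
        np.interp(frames, key_indices, key_latents[:, dim])
        for dim in range(key_latents.shape[1])
    ]
    return np.stack(columns, axis=1)

key_latents = np.array([[0.0, 0.0], [4.0, 8.0], [8.0, 0.0]])
key_indices = [0, 4, 8]
latents = interpolate_keyframes(key_latents, key_indices, num_frames=9)
```

Here frames 0, 4, and 8 reproduce the anchors exactly, and the frames in between lie on straight lines connecting them.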

Recent Research Findings and Experimental Benchmarks

Peer-reviewed studies published in 2024 have provided quantitative evidence for the effectiveness of various temporal coherence techniques. A comprehensive benchmark study comparing different conditioning strategies across multiple datasets revealed that hybrid approaches combining trajectory regularization, optical flow conditioning, and adaptive attention mechanisms achieve the best performance across diverse motion scenarios.

Quantitative metrics for temporal consistency have evolved beyond simple frame-to-frame similarity measures. Modern evaluation frameworks incorporate perceptual metrics such as LPIPS (Learned Perceptual Image Patch Similarity) computed across temporal windows, temporal Fréchet Inception Distance (t-FID), and specialized metrics for detecting flickering and morphing artifacts. These metrics provide a more nuanced assessment of temporal quality that aligns better with human perception.

Benchmark Results Summary

Experimental results demonstrate that the choice of conditioning strategy should be adapted to the specific characteristics of the generated content. For scenes with rapid motion or complex dynamics, optical flow conditioning provides the most significant improvements. For scenes with subtle motion or static backgrounds, trajectory regularization alone may suffice while reducing computational overhead. This finding has led to the development of adaptive conditioning frameworks that dynamically adjust their strategy based on motion analysis.

Long-sequence generation remains an active area of research, with recent studies exploring hierarchical generation strategies that decompose long videos into overlapping segments. These approaches maintain global coherence through shared latent representations while allowing for efficient parallel processing. Preliminary results suggest that hierarchical methods can generate sequences exceeding 1000 frames while maintaining consistency comparable to shorter sequences.
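The segment bookkeeping behind such a scheme can be sketched as below. This covers only the windowing and cross-fade blending of overlapping segments, not the shared latent representation; the triangular weights and function names are assumptions, and the code assumes `num_frames >= window` and `overlap < window`.

```python
import numpy as np

def segment_starts(num_frames, window, overlap):
    """Start indices of overlapping windows that together cover every frame."""
    stride = window - overlap
    starts = list(range(0, num_frames - window + 1, stride))
    if starts[-1] + window < num_frames:
        starts.append(num_frames - window)  # extra window flush with the end
    return starts

def blend_segments(segments, starts, num_frames):
    """Cross-fade overlapping segments using triangular weights.

    segments: list of arrays of shape (window, ...), one per start index
    """
    window = segments[0].shape[0]
    ramp = np.minimum(np.arange(1, window + 1), np.arange(window, 0, -1)).astype(float)
    out = np.zeros((num_frames,) + segments[0].shape[1:])
    norm = np.zeros(num_frames)
    extra_dims = (1,) * (segments[0].ndim - 1)
    for seg, start in zip(segments, starts):
        out[start:start + window] += ramp.reshape((-1,) + extra_dims) * seg
        norm[start:start + window] += ramp
    return out / norm.reshape((-1,) + extra_dims)

starts = segment_starts(num_frames=20, window=8, overlap=4)
uneven_starts = segment_starts(num_frames=22, window=8, overlap=4)

# Sanity check: if every segment agrees with one underlying sequence,
# blending must return that sequence unchanged.
rng = np.random.default_rng(0)
truth = rng.normal(size=(20, 3))
segments = [truth[s:s + 8] for s in starts]
blended = blend_segments(segments, starts, num_frames=20)
```

Because each segment can be generated independently once the windows are fixed, the loop over segments is the part that lends itself to parallel processing.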

Motion Preservation Techniques and Future Directions

Preserving natural motion characteristics while maintaining temporal coherence represents a delicate balance in video generation. Motion preservation techniques aim to ensure that generated videos exhibit physically plausible dynamics, smooth trajectories, and realistic acceleration patterns. This requires the model to learn not just spatial appearance but also the temporal evolution of motion fields.

Physics-informed conditioning has emerged as a promising approach for improving motion realism. By incorporating physical constraints such as momentum conservation, gravity effects, and collision dynamics into the generation process, these methods produce videos that adhere to natural laws of motion. Implementation typically involves adding physics-based loss terms during training or using physics simulators to guide the sampling process.
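As a toy example of a physics-based loss term, the sketch below penalizes a tracked object's vertical trajectory for deviating from constant gravitational acceleration. It assumes positions are already available in metres at a known frame interval, which a real system would have to estimate; the function name and setup are hypothetical.

```python
import numpy as np

def gravity_residual_loss(heights, dt, g=9.81):
    """Mean squared deviation of the discrete vertical acceleration from -g.

    heights: (T,) vertical positions in metres, sampled every dt seconds
    The acceleration is estimated with a central second difference.
    """
    accel = (heights[2:] - 2.0 * heights[1:-1] + heights[:-2]) / dt ** 2
    return float(np.mean((accel + g) ** 2))

dt = 1.0 / 24.0                       # 24 frames per second
t = np.arange(10) * dt
free_fall = 10.0 - 0.5 * 9.81 * t ** 2   # physically consistent drop
constant_speed = 10.0 - 2.0 * t          # descends without accelerating

loss_free_fall = gravity_residual_loss(free_fall, dt)
loss_constant = gravity_residual_loss(constant_speed, dt)
```

The free-fall trajectory yields a loss near zero, while the constant-speed descent is penalized by roughly g squared, so the term pushes generated motion toward the expected acceleration.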

Motion decomposition techniques separate camera motion from object motion, allowing for independent control and more coherent generation. By explicitly modeling the camera trajectory and object dynamics separately, the model can better maintain consistency in both aspects. This decomposition also enables novel applications such as camera path editing and object motion transfer between different scenes.
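A very simple form of this decomposition can be sketched on an optical flow field. The example approximates camera motion as a single global translation estimated by the per-component median, which assumes the background covers more than half of the frame and the camera neither rotates nor zooms; richer models (for example, homographies) would be needed in general. The function name is hypothetical.

```python
import numpy as np

def split_camera_object_flow(flow):
    """Split a flow field into a global translation and a residual.

    flow: (H, W, 2) array of per-pixel (dy, dx) displacements
    Returns (camera, residual), where camera is a length-2 vector and
    residual = flow - camera approximates object motion.
    """
    camera = np.median(flow.reshape(-1, 2), axis=0)
    residual = flow - camera
    return camera, residual

# Camera pans so that every pixel moves 3 px horizontally.
flow = np.zeros((10, 10, 2))
flow[..., 1] = 3.0
# A small object adds its own motion on top of the pan.
flow[3:6, 3:6] += np.array([2.0, -1.0])

camera, residual = split_camera_object_flow(flow)
```

The median recovers the pan `(0, 3)` despite the moving object, and the residual is non-zero only inside the object region, which is what allows the two motions to be edited or conditioned independently.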

Temporal super-resolution represents an exciting frontier in motion preservation research. These techniques generate high-frame-rate videos from lower-frame-rate inputs by intelligently interpolating intermediate frames while maintaining motion coherence. Advanced approaches use learned motion models to predict plausible intermediate states rather than simple linear interpolation, resulting in smoother and more natural motion.

Future Research Directions

- Integration of 3D scene representations for improved geometric consistency

- Multi-modal conditioning combining text, audio, and motion capture data

- Adaptive temporal attention windows based on content complexity

- Real-time temporal coherence optimization for interactive applications

- Cross-domain transfer learning for motion patterns across different content types

Conclusion and Implications

Temporal coherence in Stable Video Diffusion represents a complex interplay of mathematical foundations, architectural design choices, and practical optimization strategies. The techniques discussed in this article—from temporal attention mechanisms to advanced latent space conditioning—demonstrate the rapid progress being made in addressing one of the fundamental challenges in AI video generation.

The reduction in flickering and morphing artifacts achieved through recent research has brought AI-generated video closer to production quality. However, significant challenges remain, particularly in long-sequence generation, complex motion scenarios, and maintaining consistency across diverse content types. The field continues to evolve rapidly, with new techniques and architectures emerging regularly.

For researchers and practitioners working with Stable Video Diffusion, understanding these temporal coherence mechanisms is essential for achieving high-quality results. The mathematical foundations provide insight into why certain artifacts occur and how to mitigate them, while the practical techniques offer concrete strategies for implementation. As the technology matures, we can expect to see increasingly sophisticated approaches that combine multiple conditioning strategies in adaptive frameworks.

The future of temporal coherence research lies in developing more efficient methods that maintain quality while reducing computational requirements, enabling real-time applications and longer sequences. Integration with other modalities, such as audio and 3D scene understanding, promises to unlock new capabilities and applications. As these technologies advance, the gap between AI-generated and natural video continues to narrow, opening exciting possibilities for creative expression and practical applications across numerous domains.