Stable Video Diffusion Research Articles

Explore our collection of research papers, technical analyses, and educational resources on Stable Diffusion and video generation technologies. Stay informed about the latest developments in AI-powered video synthesis, diffusion models, and computational approaches to generative media.

Temporal Coherence in Stable Video Diffusion

An in-depth exploration of how Stable Video Diffusion maintains frame-to-frame coherence across generated sequences. This article examines the mathematical foundations of temporal attention mechanisms, discusses common artifacts like flickering and morphing, and presents recent research findings on improving consistency through latent space conditioning.

Read Article

Comparative Analysis of Video Generation Architectures

A comparison of diffusion-based video generation architectures, including SVD, AnimateDiff, and transformer-based approaches. The article reports quantitative metrics such as Fréchet Video Distance (FVD) scores, CLIP similarity measurements, and temporal coherence evaluations, along with reproducible testing protocols.

Read Article

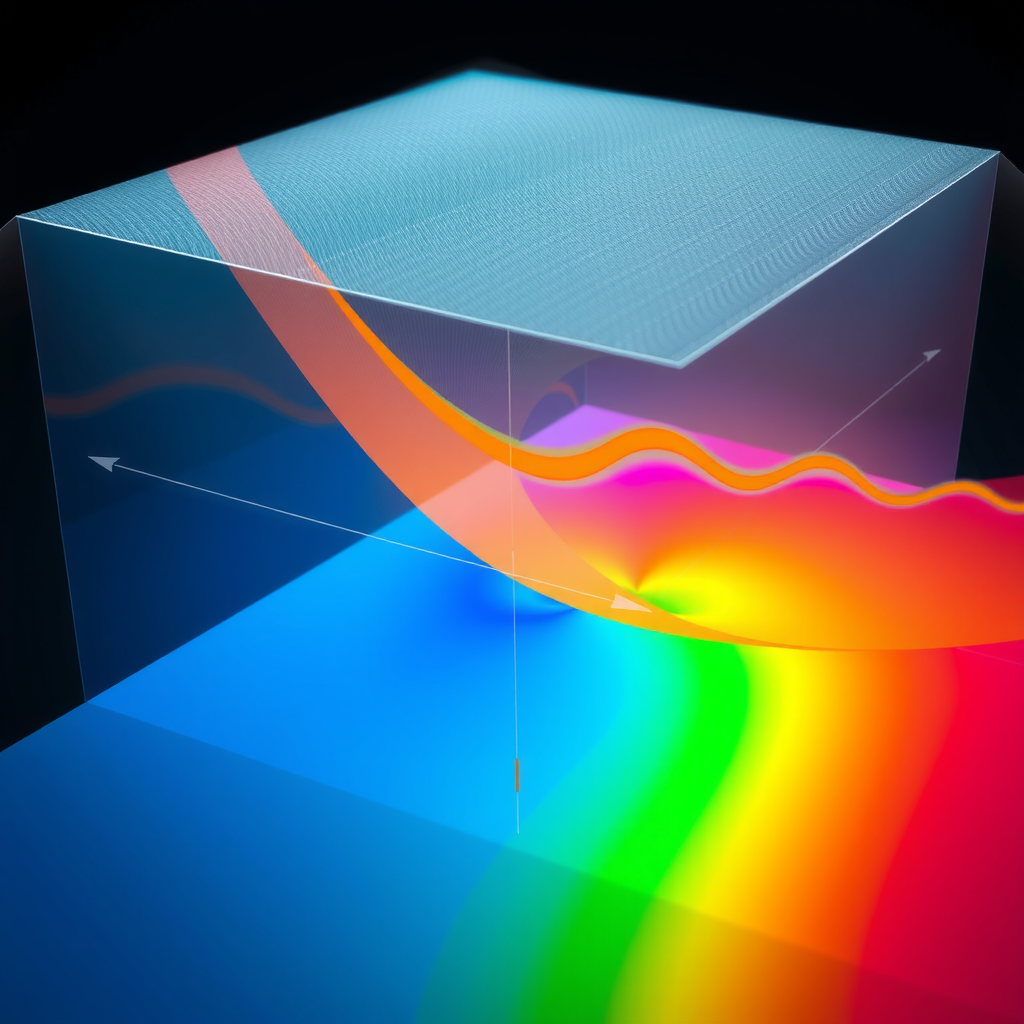

Advanced Latent Space Steering Techniques

This technical guide explores advanced methods for steering video generation through latent space interventions. It covers semantic direction discovery, interpolation strategies, and conditioning approaches that enable precise control over motion, style, and content, and it includes code examples and mathematical formulations.

Read Article

Ethical Dataset Curation in Video Generation Research

A discussion of responsible dataset curation and use in video generation research. The article examines licensing requirements, attribution practices, bias mitigation strategies, and privacy considerations when working with video training data. It provides guidelines for academic researchers and recommendations for transparent documentation.

Read Article

Computational Efficiency in Video Diffusion Models

A practical resource on improving the computational efficiency of video diffusion models. It discusses memory optimization techniques, batching strategies, quantization approaches, and hardware acceleration methods that let researchers work with limited computational resources. It also includes performance benchmarks and recommendations for profiling tools.

Read Article