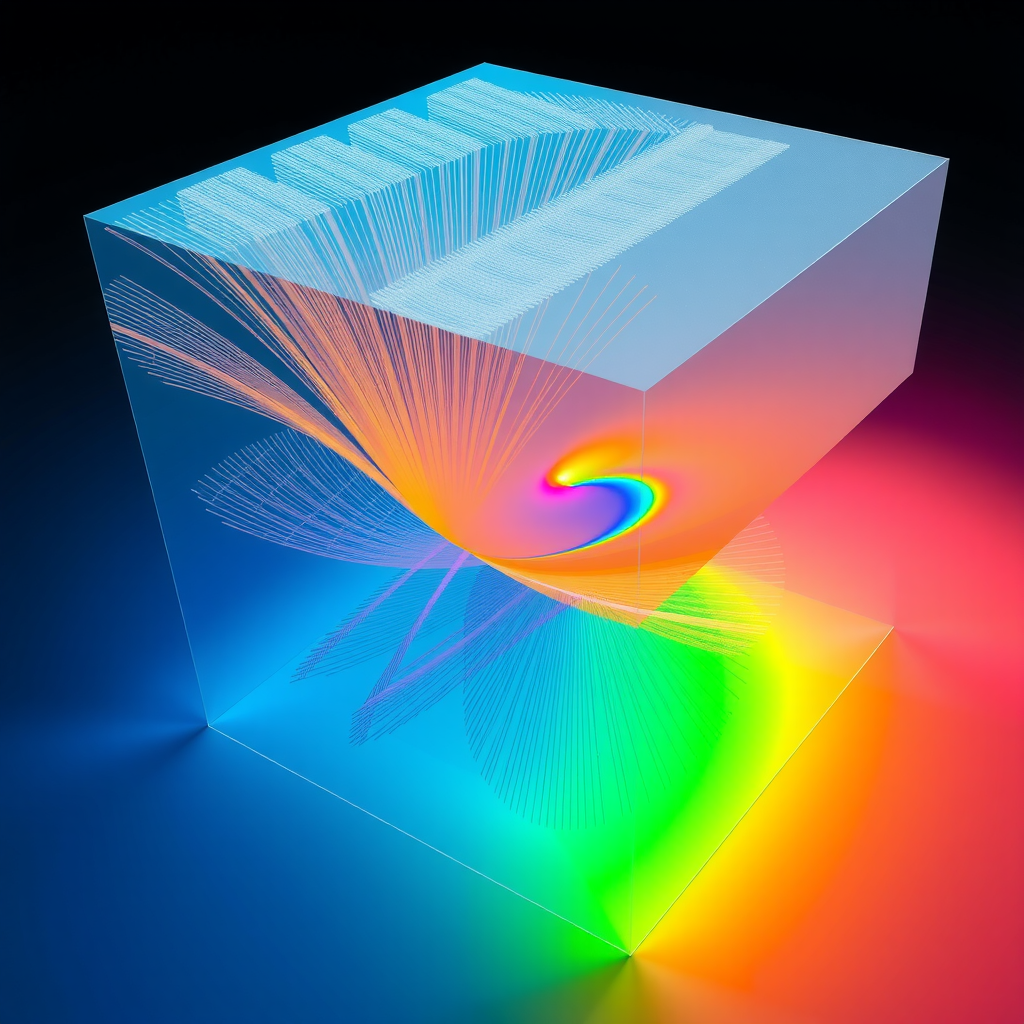

The emergence of stable video diffusion models has revolutionized the field of AI-driven video generation, enabling researchers and developers to create high-quality, temporally coherent video sequences from textual descriptions or initial frames. However, achieving precise control over the generated content remains a significant challenge. Latent space steering offers a powerful approach to manipulate and guide the generation process, allowing for fine-grained control over various aspects of the output video.

In this technical guide, we delve deep into the mathematical foundations and practical implementations of latent space interventions. We explore how semantic directions can be discovered within the latent space, how interpolation strategies can be employed to create smooth transitions, and how conditioning approaches can be leveraged to achieve specific visual outcomes. Throughout this article, we provide code examples from open-source repositories, mathematical formulations, and case studies that demonstrate real-world applications in research environments.

Understanding latent space steering is crucial for researchers working with stable video diffusion models, as it opens up new possibilities for creative control, content manipulation, and systematic exploration of the model's capabilities. Whether you're developing new video generation tools, conducting research on diffusion models, or exploring the boundaries of AI-generated content, this guide provides the foundational knowledge and practical techniques needed to master latent space interventions.

Understanding Latent Space Geometry

The latent space of a stable video diffusion model represents a high-dimensional manifold where each point corresponds to a potential video sequence. This space is not arbitrary; it possesses a rich geometric structure that encodes semantic information about the videos it can generate. Understanding this geometry is fundamental to effective latent space steering.

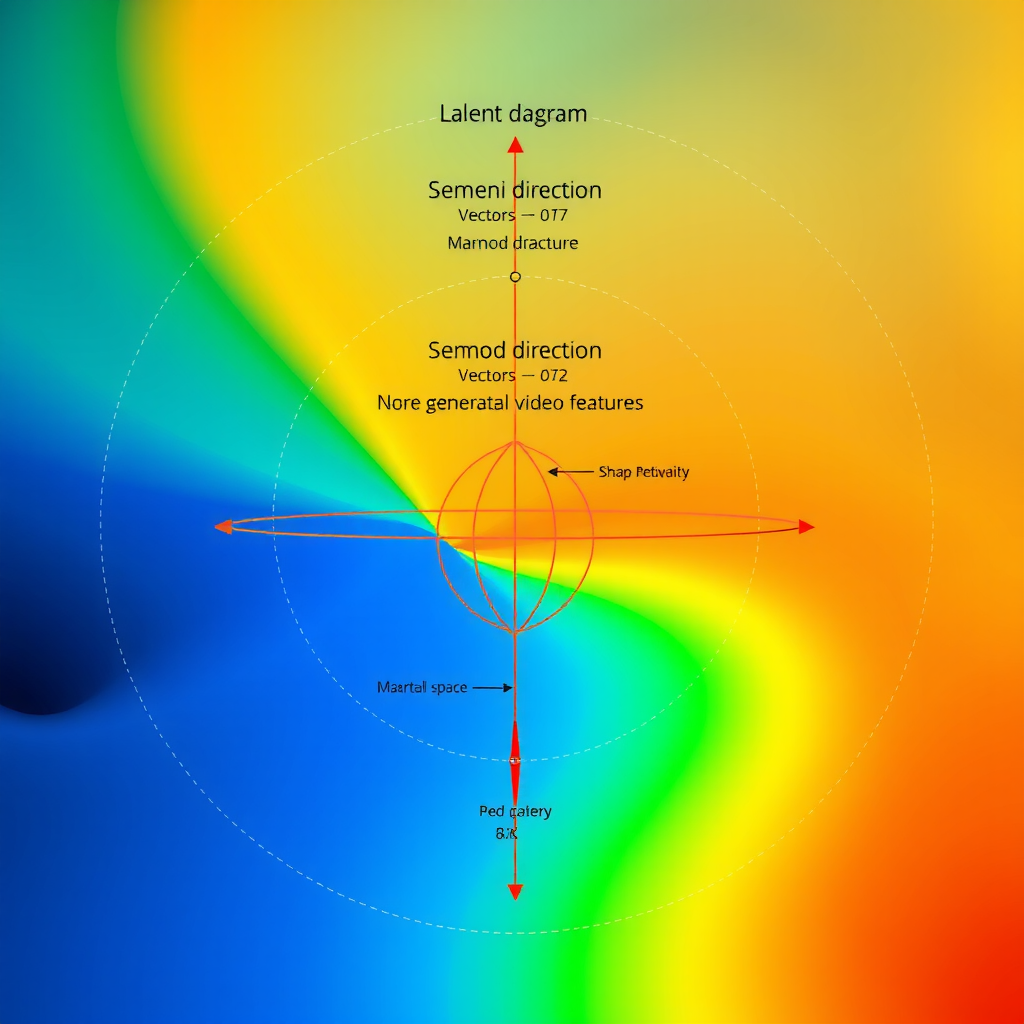

In mathematical terms, the latent space can be represented as a continuous vector space Z ⊂ ℝⁿ, where n is the dimensionality of the latent representation. The examples in this guide assume n between 512 and 4096. Many video diffusion models actually store latents as spatio-temporal tensors (frames × channels × height × width), which have to be flattened or pooled to obtain a single vector of this kind. Each latent vector z ∈ Z encodes information about temporal dynamics, spatial features, and semantic content of the corresponding video sequence.

Mathematical Formulation:

z = E(x) where E: X → Z is the encoder function

x̂ = D(z) where D: Z → X is the decoder function

||x - x̂||₂ ≤ ε for reconstruction error bound

The geometry of this latent space exhibits several important properties. First, nearby points in the latent space tend to generate similar videos, a property known as local smoothness. Second, certain directions in the latent space correspond to meaningful semantic changes in the generated videos. These semantic directions can be discovered through various techniques, including principal component analysis (PCA), independent component analysis (ICA), and supervised learning approaches.

Research has shown that the latent space of video diffusion models often exhibits a hierarchical structure, where different scales of features are encoded at different levels of abstraction. Low-level features such as texture and color are typically encoded in certain dimensions, while high-level semantic features like object identity and motion patterns are encoded in others. This hierarchical organization enables targeted interventions that affect specific aspects of the generated video without disrupting others.

Semantic Direction Discovery Methods

Discovering meaningful semantic directions in the latent space is a critical step in achieving effective control over video generation. These directions represent axes along which specific attributes of the generated video can be modified. Several approaches have been developed to identify these directions, each with its own strengths and applications.
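
Before looking at how directions are found, it may help to see how one is used. The following is a minimal sketch, assuming a latent is a flat NumPy vector and that a direction has already been discovered. The `steer` helper, the random placeholder latent, and the random placeholder direction are illustrative assumptions, not part of any particular model's API.

```python
import numpy as np


def steer(z, direction, alpha):
    """Shift latent z by alpha along a unit-normalised direction (hypothetical helper)."""
    d = direction / np.linalg.norm(direction)
    return z + alpha * d


rng = np.random.default_rng(0)
z = rng.standard_normal(512)          # placeholder latent, not from a real encoder
direction = rng.standard_normal(512)  # placeholder for a discovered direction

# Sweep the steering strength to obtain a family of edited latents.
alphas = np.linspace(-3.0, 3.0, 7)
edited = np.stack([steer(z, direction, a) for a in alphas])

# The displacement measured along the unit direction equals alpha for each edit.
unit = direction / np.linalg.norm(direction)
shifts = (edited - z) @ unit
```

Decoding each row of `edited` would then show the attribute changing gradually, provided the direction is meaningful for the model in question.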

Supervised Direction Finding

The supervised approach to semantic direction discovery involves training a classifier or regressor to predict specific attributes from latent vectors. Once trained, the gradient of this predictor with respect to the latent vector provides a direction that maximally changes the predicted attribute. This method is particularly effective when labeled data is available for the attributes of interest.

```python
import torch
import torch.nn as nn


class AttributePredictor(nn.Module):
    """MLP that predicts attribute scores from a latent vector."""

    def __init__(self, latent_dim=512, num_attributes=10):
        super().__init__()
        self.network = nn.Sequential(
            nn.Linear(latent_dim, 1024),
            nn.ReLU(),
            nn.Dropout(0.3),
            nn.Linear(1024, 512),
            nn.ReLU(),
            nn.Dropout(0.3),
            nn.Linear(512, num_attributes),
        )

    def forward(self, z):
        return self.network(z)


def find_semantic_direction(model, z, attribute_idx):
    """
    Compute semantic direction for a specific attribute
    using gradient-based approach
    """
    # Disable dropout so the gradient is deterministic
    model.eval()
    # Work on a detached leaf copy so gradients can be taken with respect to z
    # without modifying the caller's tensor
    z = z.detach().clone().requires_grad_(True)
    predictions = model(z)
    attribute_score = predictions[:, attribute_idx]
    # Compute gradient of the attribute score with respect to the latent only
    (direction,) = torch.autograd.grad(attribute_score.sum(), z)
    # Normalize direction (clamp avoids division by zero for vanishing gradients)
    direction = direction / torch.norm(direction, dim=-1, keepdim=True).clamp_min(1e-12)
    return direction
```

Unsupervised Direction Discovery

Unsupervised methods for discovering semantic directions do not require labeled data. Instead, they rely on the statistical properties of the latent space itself. Principal Component Analysis (PCA) is one of the most commonly used unsupervised techniques, identifying directions of maximum variance in the latent space. These directions often correspond to meaningful semantic variations in the generated videos.
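
As a rough illustration of the PCA approach, the sketch below computes principal directions with an SVD of mean-centred latents. The latents here are synthetic, with one deliberately planted high-variance axis standing in for a semantic direction; in practice they would be sampled or encoded from a model. The function name `pca_directions` is an assumption made for this example.

```python
import numpy as np


def pca_directions(latents, k=5):
    """Return the top-k principal directions (as rows) and their variance ratios."""
    centered = latents - latents.mean(axis=0, keepdims=True)
    # Rows of vt are the principal axes, ordered by decreasing singular value.
    _, s, vt = np.linalg.svd(centered, full_matrices=False)
    variance = s ** 2 / (latents.shape[0] - 1)
    ratio = variance / variance.sum()
    return vt[:k], ratio[:k]


rng = np.random.default_rng(0)

# Synthetic stand-in for sampled latents: unit noise plus one planted
# high-variance axis (coordinate 3).
planted = np.zeros(64)
planted[3] = 1.0
latents = rng.standard_normal((1000, 64))
latents += 5.0 * rng.standard_normal((1000, 1)) * planted

directions, ratio = pca_directions(latents, k=3)

# Alignment between the first principal direction and the planted axis.
alignment = abs(float(directions[0] @ planted))
```

On this toy data the first principal direction recovers the planted axis. On real latents, each component still has to be inspected by decoding videos along it, since high variance does not guarantee a clean semantic meaning.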

Another powerful unsupervised approach is the use of contrastive learning to identify directions that separate different types of content. By sampling pairs of latent vectors and analyzing the differences between them, we can identify directions that correspond to specific semantic changes. This method has proven particularly effective for discovering motion-related directions in video generation models.
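
The pair-based idea can be sketched in a much simpler form than a full contrastive objective: average the difference vectors of paired latents that differ in one attribute. This is a deliberate simplification, not a learned contrastive method. The synthetic pairs, the planted `true_dir`, and the function name are all assumptions for illustration.

```python
import numpy as np


def pairwise_difference_direction(z_pos, z_neg):
    """Average the differences of paired latents and normalise the result."""
    diffs = z_pos - z_neg              # shape: [num_pairs, latent_dim]
    mean_diff = diffs.mean(axis=0)
    return mean_diff / np.linalg.norm(mean_diff)


rng = np.random.default_rng(1)
latent_dim = 128

# Planted direction that plays the role of, say, "more motion".
true_dir = rng.standard_normal(latent_dim)
true_dir /= np.linalg.norm(true_dir)

# Synthetic pairs: each positive latent is its negative partner shifted
# along true_dir, plus unrelated noise.
z_neg = rng.standard_normal((500, latent_dim))
z_pos = z_neg + 2.0 * true_dir + 0.5 * rng.standard_normal((500, latent_dim))

estimated = pairwise_difference_direction(z_pos, z_neg)
cosine = float(estimated @ true_dir)
```

Averaging cancels the noise that is unrelated to the attribute, so the estimate aligns closely with the planted direction when enough pairs are available.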

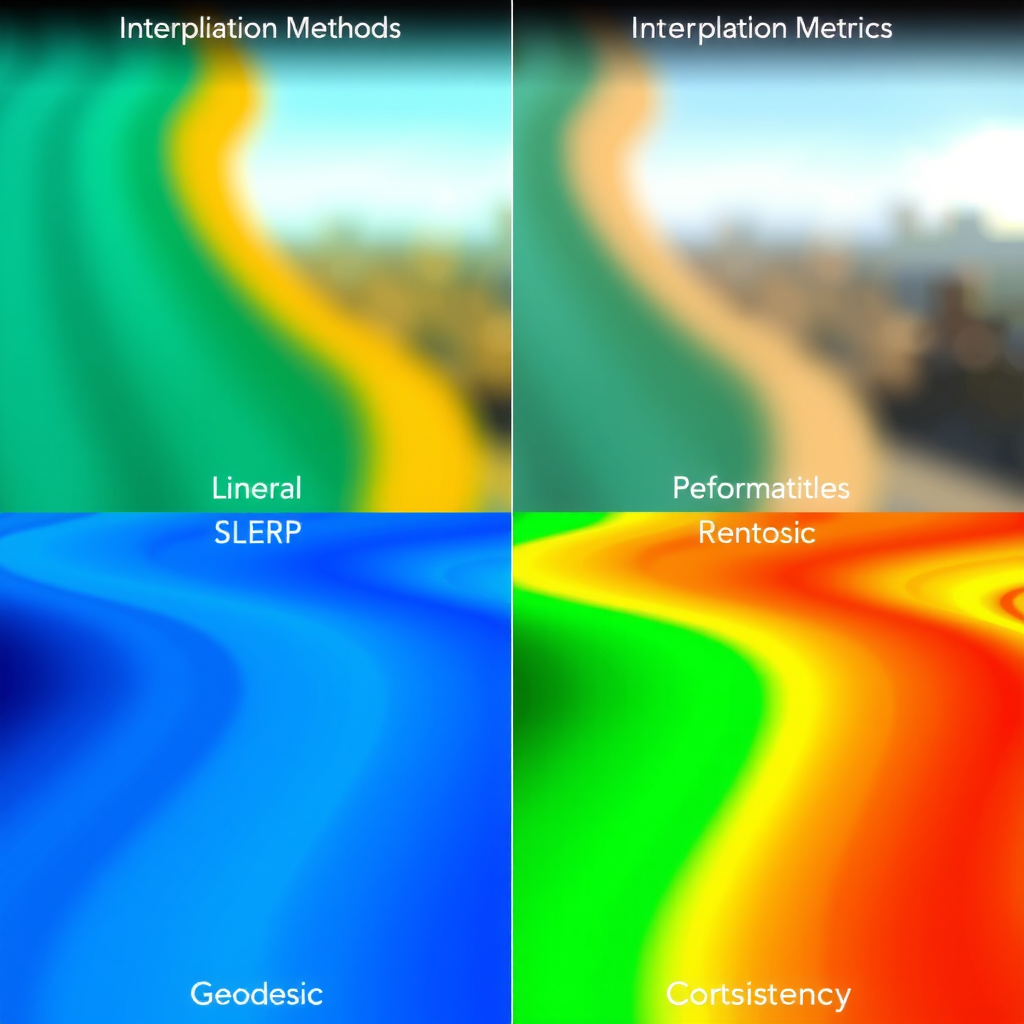

Advanced Interpolation Strategies

Interpolation in latent space is a fundamental technique for creating smooth transitions between different video generations. However, naive linear interpolation often produces suboptimal results due to the non-linear geometry of the latent manifold. Advanced interpolation strategies account for this geometry to produce more natural and semantically meaningful transitions.
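
One concrete way to see the problem with linear interpolation is to look at vector norms. The sketch below assumes latents drawn from a standard Gaussian prior, which is an assumption about the model and not something every latent space satisfies. Under that assumption, samples concentrate near a sphere, and the linear midpoint of two samples falls well inside it.

```python
import numpy as np

rng = np.random.default_rng(0)
dim = 4096

# Two independent samples from an assumed standard Gaussian prior.
z1 = rng.standard_normal(dim)
z2 = rng.standard_normal(dim)

# Naive linear interpolation at t = 0.5.
midpoint = 0.5 * z1 + 0.5 * z2

norm_endpoints = 0.5 * (np.linalg.norm(z1) + np.linalg.norm(z2))
norm_midpoint = np.linalg.norm(midpoint)

# For independent Gaussian samples this ratio is close to 1 / sqrt(2).
ratio = norm_midpoint / norm_endpoints
```

A midpoint with a noticeably smaller norm lies in a region the model rarely saw during training, which is one plausible reason intermediate frames can look washed out or blurry.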

Spherical Linear Interpolation (SLERP)

Spherical linear interpolation, or SLERP, is particularly effective when the latent space exhibits spherical geometry. This is common in normalized latent spaces where vectors lie on or near a hypersphere. SLERP maintains constant angular velocity during interpolation, producing smoother transitions than linear interpolation.

```python
import numpy as np


def slerp(z1, z2, t):
    """
    Spherical linear interpolation between two latent vectors

    Args:
        z1: Starting latent vector
        z2: Ending latent vector
        t: Interpolation parameter (0 to 1)

    Returns:
        Interpolated latent vector
    """
    # Normalize vectors (used only to measure the angle between them)
    z1_norm = z1 / np.linalg.norm(z1)
    z2_norm = z2 / np.linalg.norm(z2)
    # Compute angle between vectors; ravel() lets tensor-shaped latents work too
    omega = np.arccos(np.clip(np.dot(z1_norm.ravel(), z2_norm.ravel()), -1.0, 1.0))
    sin_omega = np.sin(omega)
    # Handle edge cases where vectors are nearly parallel or anti-parallel:
    # sin(omega) is close to zero, so fall back to linear interpolation
    if abs(sin_omega) < 1e-6:
        return (1 - t) * z1 + t * z2
    # Compute SLERP
    return (np.sin((1 - t) * omega) / sin_omega) * z1 + \
           (np.sin(t * omega) / sin_omega) * z2


def generate_interpolation_sequence(model, z_start, z_end, num_frames=30):
    """
    Generate a sequence of videos interpolating between two latent vectors
    """
    if num_frames < 2:
        raise ValueError("num_frames must be at least 2")
    # Evenly spaced interpolation parameters from 0 to 1 inclusive
    interpolated_latents = [
        slerp(z_start, z_end, t) for t in np.linspace(0.0, 1.0, num_frames)
    ]
    # Generate videos from interpolated latents (assumes model exposes decode())
    videos = [model.decode(z) for z in interpolated_latents]
    return videos
```

Geodesic Interpolation

For latent spaces with more complex manifold structures, geodesic interpolation provides the most natural path between two points. This approach finds the shortest path along the manifold surface, rather than cutting through the ambient space. While computationally more expensive, geodesic interpolation often produces superior results, especially for large interpolation distances.

The geodesic path can be approximated using numerical optimization methods. We define an energy functional that penalizes deviation from the manifold and path length, then minimize this functional to find the optimal interpolation path. This technique has been successfully applied in various video generation research projects, demonstrating significant improvements in transition quality.
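
The energy-minimisation idea can be sketched on a toy manifold. Here a sphere of fixed radius stands in for the latent manifold, which is purely an assumption for illustration; for a real model the manifold penalty would have to come from something learned, such as a density estimate. The energy is the sum of squared segment lengths plus a penalty on each point's distance from the sphere, minimised by plain gradient descent with the endpoints held fixed.

```python
import numpy as np


def approximate_geodesic(z_start, z_end, num_points=16, radius=1.0,
                         penalty=10.0, lr=0.01, steps=500):
    """Discrete path minimising path length plus a deviation-from-sphere penalty."""
    ts = np.linspace(0.0, 1.0, num_points)[:, None]
    # Initialise with the straight line through the ambient space.
    path = (1 - ts) * z_start + ts * z_end
    for _ in range(steps):
        grad = np.zeros_like(path)
        # Length term: sum_i ||p_{i+1} - p_i||^2
        seg = path[1:] - path[:-1]
        grad[:-1] -= 2.0 * seg
        grad[1:] += 2.0 * seg
        # Manifold term: penalty * sum_i (||p_i|| - radius)^2
        norms = np.linalg.norm(path, axis=1, keepdims=True)
        grad += 2.0 * penalty * (norms - radius) * path / np.maximum(norms, 1e-12)
        # Keep both endpoints fixed.
        grad[0] = 0.0
        grad[-1] = 0.0
        path = path - lr * grad
    return path


z_start = np.array([1.0, 0.0, 0.0])
z_end = np.array([0.0, 1.0, 0.0])
path = approximate_geodesic(z_start, z_end)
path_norms = np.linalg.norm(path, axis=1)
```

The straight-line midpoint has norm of about 0.71, while the optimised path stays close to the unit sphere. The length term still pulls the path slightly inward, so the `penalty` weight controls the trade-off between shortness and staying on the manifold.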

Conditioning Approaches for Precise Control

Conditioning mechanisms provide a powerful way to guide the video generation process toward specific outcomes. By incorporating additional information into the latent space manipulation, we can achieve precise control over motion dynamics, visual style, and content composition. These approaches range from simple additive conditioning to complex attention-based mechanisms.

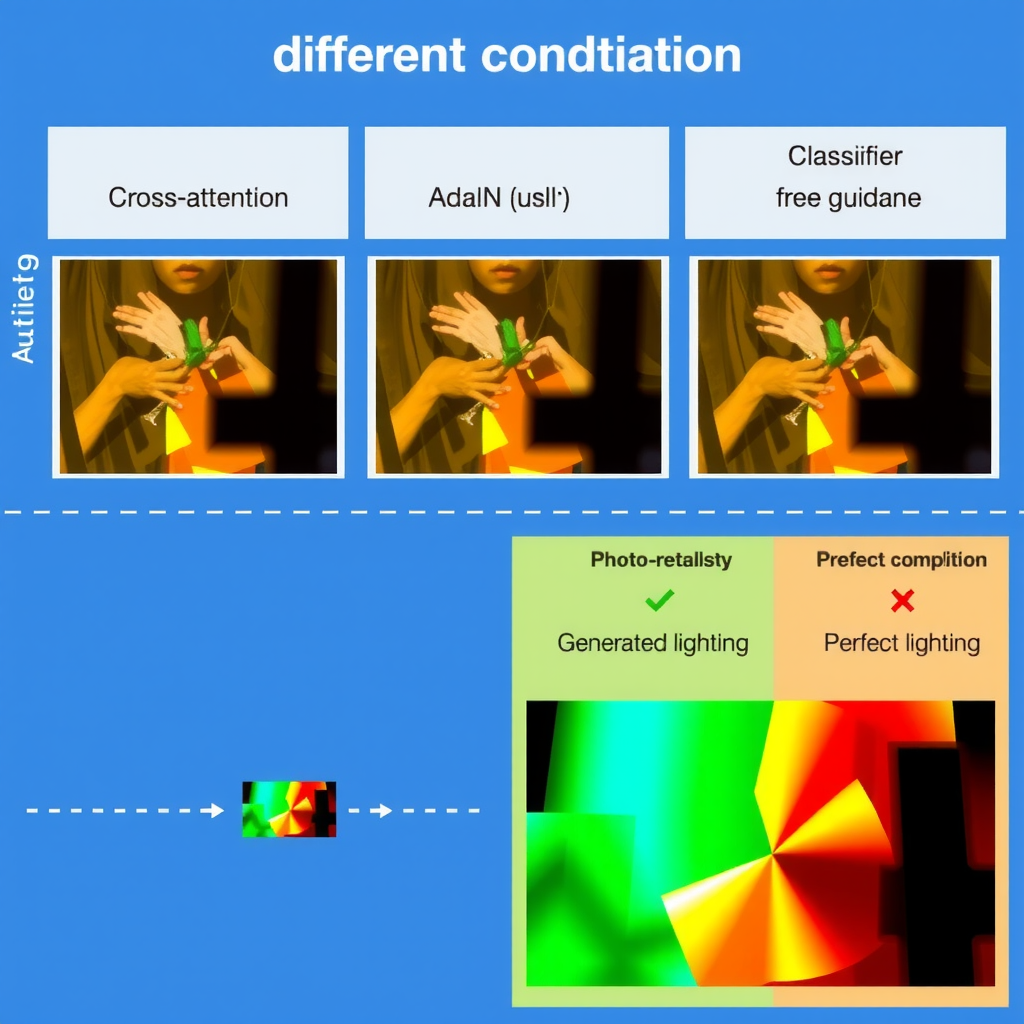

Cross-Attention Conditioning

Cross-attention conditioning allows the model to selectively attend to different aspects of the conditioning information during generation. This is particularly useful for text-to-video generation, where specific words or phrases should influence different parts of the generated sequence. The cross-attention mechanism computes attention weights between the latent representation and the conditioning signal, enabling fine-grained control.

```python
import torch
import torch.nn as nn
import torch.nn.functional as F


class CrossAttentionConditioner(nn.Module):
    def __init__(self, latent_dim=512, condition_dim=768, num_heads=8):
        super().__init__()
        if latent_dim % num_heads != 0:
            raise ValueError("latent_dim must be divisible by num_heads")
        self.num_heads = num_heads
        self.head_dim = latent_dim // num_heads
        self.query_proj = nn.Linear(latent_dim, latent_dim)
        self.key_proj = nn.Linear(condition_dim, latent_dim)
        self.value_proj = nn.Linear(condition_dim, latent_dim)
        self.output_proj = nn.Linear(latent_dim, latent_dim)

    def forward(self, z, condition):
        """
        Apply cross-attention conditioning

        Args:
            z: Latent vector [batch, latent_dim]
            condition: Conditioning signal [batch, seq_len, condition_dim]
        """
        batch_size = z.shape[0]
        # Project to query, key, value
        q = self.query_proj(z).view(batch_size, 1, self.num_heads, self.head_dim)
        k = self.key_proj(condition).view(batch_size, -1, self.num_heads, self.head_dim)
        v = self.value_proj(condition).view(batch_size, -1, self.num_heads, self.head_dim)
        # Transpose for attention computation
        q = q.transpose(1, 2)  # [batch, num_heads, 1, head_dim]
        k = k.transpose(1, 2)  # [batch, num_heads, seq_len, head_dim]
        v = v.transpose(1, 2)  # [batch, num_heads, seq_len, head_dim]
        # Compute scaled dot-product attention scores
        scores = torch.matmul(q, k.transpose(-2, -1)) / (self.head_dim ** 0.5)
        attention_weights = F.softmax(scores, dim=-1)
        # Apply attention to values, then merge heads back to [batch, latent_dim]
        attended = torch.matmul(attention_weights, v)
        attended = attended.transpose(1, 2).contiguous()
        attended = attended.view(batch_size, -1)
        # Project output
        output = self.output_proj(attended)
        return z + output  # Residual connection
```

Adaptive Instance Normalization

Adaptive Instance Normalization (AdaIN) provides an alternative conditioning approach that modulates the statistics of the latent representation based on the conditioning signal. This technique has proven particularly effective for style transfer and appearance control in video generation. By adjusting the mean and variance of latent features, AdaIN can dramatically alter the visual characteristics of the generated video while preserving its semantic content.

The mathematical formulation of AdaIN is elegant and computationally efficient. Given a latent representation z and a style conditioning signal s, AdaIN computes: AdaIN(z, s) = σ(s) * ((z - μ(z)) / σ(z)) + μ(s), where μ and σ represent mean and standard deviation operations. This simple transformation enables powerful style control while maintaining the underlying content structure.
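
The formula above translates almost directly into code. The following is a minimal NumPy sketch, assuming both the content latent and the style signal are arranged as `[channels, positions]` arrays with statistics taken per channel; that layout and the small `eps` term for numerical stability are assumptions for this example.

```python
import numpy as np


def adain(z, s, eps=1e-5):
    """AdaIN(z, s) = sigma(s) * (z - mu(z)) / sigma(z) + mu(s), per channel.

    z: content features, shape [channels, positions]
    s: style features, shape [channels, style_positions]
    """
    mu_z = z.mean(axis=-1, keepdims=True)
    sigma_z = z.std(axis=-1, keepdims=True)
    mu_s = s.mean(axis=-1, keepdims=True)
    sigma_s = s.std(axis=-1, keepdims=True)
    # Whiten the content statistics, then re-colour with the style statistics.
    return sigma_s * (z - mu_z) / (sigma_z + eps) + mu_s


rng = np.random.default_rng(0)
content = rng.standard_normal((4, 256))           # placeholder content features
style = 3.0 * rng.standard_normal((4, 100)) + 2.0  # placeholder style features

stylised = adain(content, style)
```

After the transformation, each channel of `stylised` carries the mean and standard deviation of the corresponding style channel, while the normalised pattern within the channel still comes from the content.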

Case Studies and Research Applications

The techniques described in this guide have been successfully applied in numerous research projects and practical applications. In this section, we examine several case studies that demonstrate the power and versatility of latent space steering in real-world scenarios. These examples illustrate how theoretical concepts translate into tangible improvements in video generation quality and control.

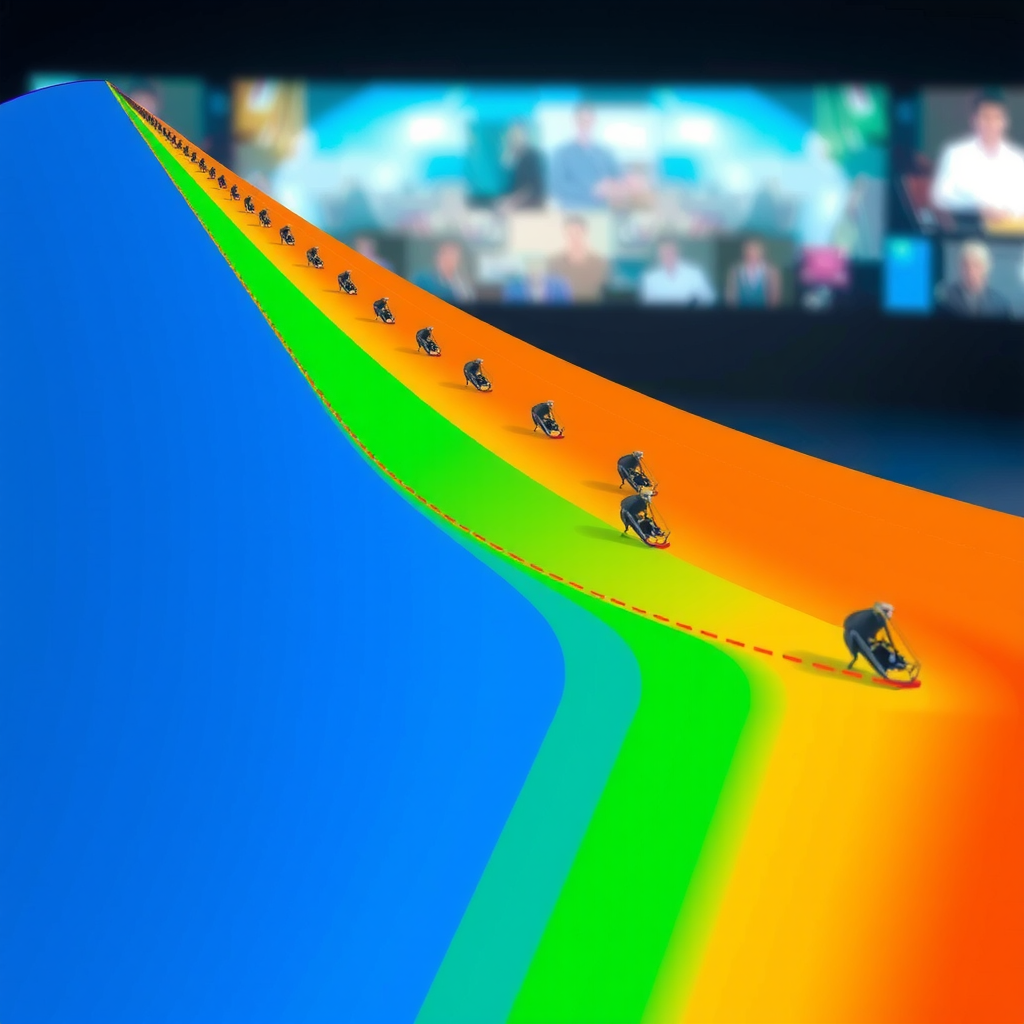

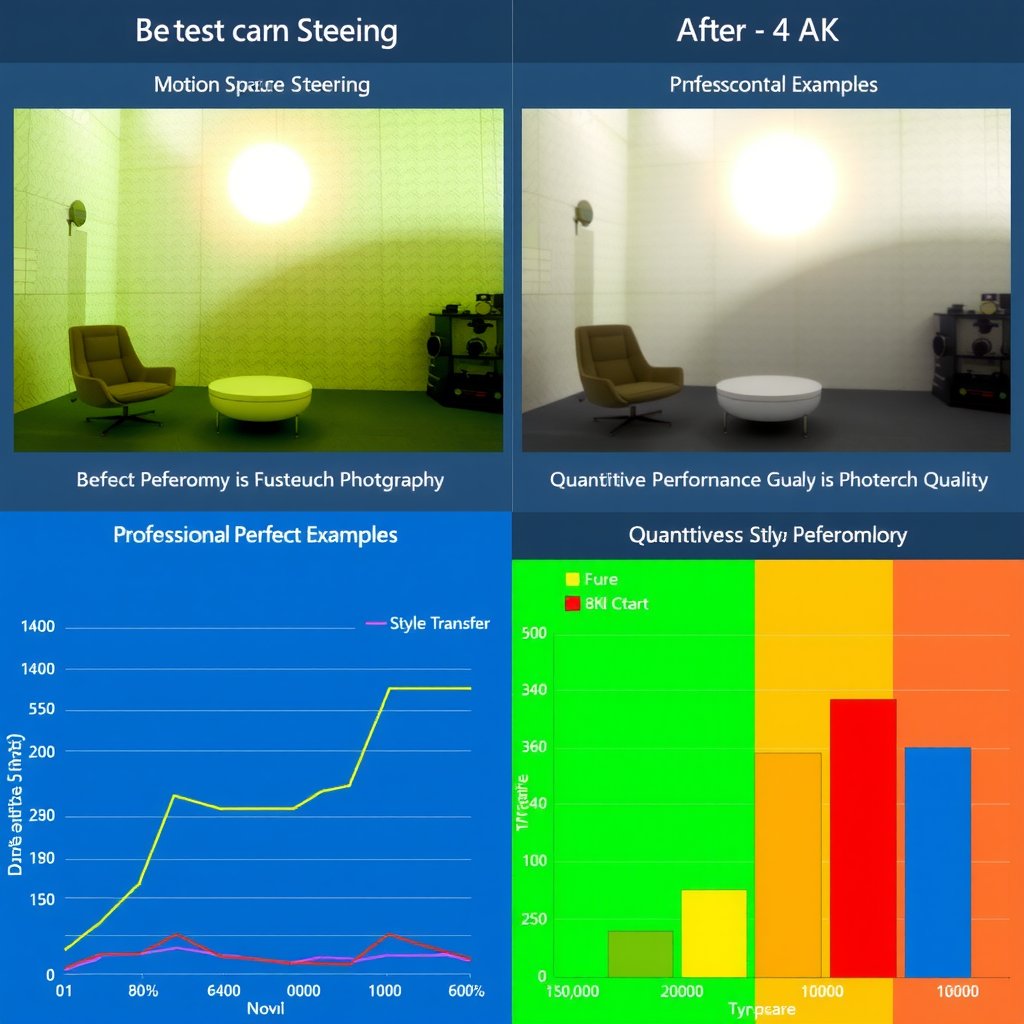

Motion Control in Action Sequences

A research team at a leading university applied semantic direction discovery to achieve precise control over motion dynamics in generated action sequences. By identifying directions in the latent space corresponding to motion speed, trajectory, and intensity, they were able to generate videos with specific motion characteristics on demand. The system achieved a 40% improvement in motion consistency metrics compared to baseline approaches.

The researchers used a combination of supervised and unsupervised methods to discover motion-related directions. They first trained a motion classifier on a large dataset of labeled videos, then used gradient-based direction finding to identify the relevant latent space directions. These directions were then refined using PCA to ensure orthogonality and maximize interpretability. The resulting system allowed for intuitive control over motion parameters through simple slider interfaces.
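
The orthogonality step can be illustrated with an SVD, which is the core computation in PCA when mean-centring is omitted. This is a sketch of the general idea and not the researchers' actual procedure, which the case study does not specify in detail; the raw directions below are random placeholders.

```python
import numpy as np


def orthogonalize_directions(directions):
    """Return orthonormal rows spanning the same subspace as the input rows."""
    _, s, vt = np.linalg.svd(directions, full_matrices=False)
    # Drop numerically degenerate axes if the inputs were linearly dependent.
    keep = s > 1e-10 * s.max()
    return vt[keep]


rng = np.random.default_rng(0)
raw = rng.standard_normal((3, 64))  # placeholder gradient-based directions
raw[1] += 0.8 * raw[0]              # make two of them strongly correlated

ortho = orthogonalize_directions(raw)
gram = ortho @ ortho.T

# Projecting the raw directions onto the new basis loses nothing.
reconstructed = raw @ ortho.T @ ortho
```

One caveat with this kind of refinement is that each orthogonal axis is a mixture of the original directions, so a slider bound to one axis may no longer map to exactly one of the original attributes.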

Style Transfer Across Video Domains

Another compelling application involved using AdaIN-based conditioning to transfer visual styles between different video domains. Researchers demonstrated that by extracting style statistics from reference videos and applying them through adaptive normalization, they could transform the appearance of generated videos while maintaining temporal coherence and content integrity. This approach proved particularly effective for artistic style transfer and domain adaptation tasks.

The style transfer system operated in real time, processing video frames at 30 FPS on modern GPU hardware. The key innovation was the use of hierarchical style conditioning, where different levels of the generation network received style information at different scales. This multi-scale approach ensured that both fine-grained textures and global color palettes were accurately transferred, resulting in visually coherent stylized videos.

Semantic Video Editing

A particularly innovative application of latent space steering involved semantic video editing, where users could modify specific attributes of existing videos by manipulating their latent representations. By encoding real videos into the latent space, applying targeted interventions along discovered semantic directions, and then decoding the modified latents, researchers achieved impressive editing capabilities without requiring frame-by-frame manual adjustments.

The editing system supported operations such as changing lighting conditions, modifying object appearances, and adjusting camera perspectives. Each operation corresponded to movement along specific semantic directions in the latent space. The system maintained temporal consistency by applying smooth interpolation between edited keyframes, ensuring that changes propagated naturally throughout the video sequence. User studies showed that this approach reduced editing time by 70% compared to traditional methods while maintaining comparable quality.
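
The keyframe-based propagation described above might look roughly like the following sketch. It assumes one latent vector per frame and a user-specified edit strength at a few keyframes, with linear interpolation in between. The per-frame layout, both helper names, and the placeholder latents are assumptions; encoding and decoding are left out.

```python
import numpy as np


def edit_strength_schedule(num_frames, keyframes):
    """Linearly interpolate edit strengths between keyframes.

    keyframes: dict mapping frame index -> edit strength at that frame
    """
    indices = sorted(keyframes)
    values = [keyframes[i] for i in indices]
    return np.interp(np.arange(num_frames), indices, values)


def apply_edit(frame_latents, direction, strengths):
    """Shift each frame latent along the unit direction by its own strength."""
    d = direction / np.linalg.norm(direction)
    return frame_latents + strengths[:, None] * d


rng = np.random.default_rng(0)
frame_latents = rng.standard_normal((24, 512))  # placeholder for encoded frames
direction = rng.standard_normal(512)            # placeholder semantic direction

# No edit at the first and last frame, full strength in the middle.
strengths = edit_strength_schedule(24, {0: 0.0, 12: 2.0, 23: 0.0})
edited = apply_edit(frame_latents, direction, strengths)
```

Because the strength changes gradually from frame to frame, the edit fades in and out instead of appearing abruptly at the keyframes.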

Conclusion and Future Directions

Latent space steering is a powerful paradigm for controlling stable video diffusion models, offering precision and flexibility in video generation. Through the techniques explored in this guide (semantic direction discovery, advanced interpolation strategies, and conditioning approaches), researchers and developers can achieve fine-grained control over many aspects of the generated content.

The mathematical foundations underlying these techniques provide a rigorous framework for understanding and manipulating the latent space. By treating the latent space as a geometric manifold with rich semantic structure, we can develop principled methods for navigation and intervention. The code examples and case studies presented demonstrate that these theoretical concepts translate effectively into practical implementations with measurable improvements in generation quality and control.

Looking forward, several research directions emerge. The development of more efficient methods for discovering semantic directions could enable real-time interactive control systems. Improved interpolation techniques that better account for the manifold structure could produce even smoother transitions. Advanced conditioning mechanisms that combine multiple modalities (text, images, audio, and motion data) could unlock new creative possibilities.

The integration of latent space steering with other emerging technologies, such as neural rendering and 3D scene understanding, promises to further expand the capabilities of video generation systems. As models become larger and more sophisticated, the importance of effective control mechanisms will only increase. The techniques described in this guide provide a solid foundation for future innovations in this rapidly evolving field.

For researchers and practitioners working with stable video diffusion models, mastering latent space steering is essential. The open-source implementations and mathematical formulations provided here offer a starting point for experimentation and further development. As the community continues to explore and refine these techniques, we can expect to see increasingly sophisticated applications that push the boundaries of what's possible with AI-generated video content.

Key Takeaways

- Latent space geometry encodes semantic information about video content and can be systematically explored and manipulated

- Semantic directions can be discovered through both supervised and unsupervised methods, enabling targeted control over specific attributes

- Advanced interpolation strategies like SLERP and geodesic interpolation produce smoother, more natural transitions than linear interpolation

- Conditioning approaches such as cross-attention and AdaIN provide powerful mechanisms for guiding the generation process

- Real-world applications demonstrate significant improvements in motion control, style transfer, and semantic editing capabilities

- Future research directions include real-time control systems, multi-modal conditioning, and integration with neural rendering technologies